The term robot means different things to different people. Science fiction books and movies have strongly influenced what many people expect a robot to be or what it can do. Sadly, the practice of robotics is far behind this popular conception. One thing is certain, though: robotics will be an important technology in this century. Products such as vacuum cleaning robots have already been with us for over a decade, and self-driving cars are coming. These are the vanguard of a wave of smart machines that will appear in our homes and workplaces in the near to medium future.

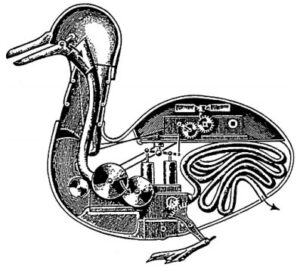

In the eighteenth century the people of Europe were fascinated by automata such as Vaucanson's duck, shown in Fig. These machines, complex by the standards of the day, demonstrated what then seemed life-like behavior. The duck used a cam mechanism to sequence its movements, and Vaucanson went on to explore the mechanization of silk weaving. Jacquard extended these ideas and developed a loom, shown in Fig., that was essentially a programmable weaving machine. The pattern to be woven was encoded as a series of holes on punched cards. This machine has many hallmarks of a modern robot: it performed a physical task and was reprogrammable.

The term robot first appeared in the 1920 Czech science fiction play "Rossum's Universal Robots" by Karel Čapek (pronounced Chapek). The term was coined by his brother Josef; in Czech it means serf labor, but colloquially it means hard work or drudgery. The robots in the play were artificial people, or androids, and as in so many robot stories that followed, the robots rebel and it ends badly for humanity. Isaac Asimov's robot series, comprising many books and short stories written between 1950 and 1985, explored issues of human and robot interaction and morality.

The robots in these stories are equipped with "positronic brains" in which the "Three Laws of Robotics" are encoded. These stories have influenced subsequent books and movies, which in turn have shaped the public perception of what robots are. The mid-twentieth century also saw the advent of the field of cybernetics, an uncommon term today but then an exciting science at the frontiers of understanding life and creating intelligent machines.

The first patent for what we would now consider a robot was filed in 1954 by George C. Devol and issued in 1961. The device comprised a mechanical arm with a gripper that was mounted on a track, and the sequence of motions was encoded as magnetic patterns stored on a rotating drum. The first robotics company, Unimation, was founded by Devol and Joseph Engelberger in 1956, and their first industrial robot, shown in Fig., was installed in 1961.

(a) Vaucanson's duck (1739)

The original vision of Devol and Engelberger for robotic automation has become a reality, and many millions of arm-type robots, such as the one shown in Fig., have been built and put to work at tasks such as welding, painting, machine loading and unloading, electronic assembly, packaging and palletizing. The use of robots has led to increased productivity and improved product quality. Today many products we buy have been assembled or handled by a robot.

Unimation Inc. (1956–1982). Devol sought financing to develop his unimation technology, and at a cocktail party in 1954 he met Joseph Engelberger, who was then an engineer with Manning, Maxwell and Moore. In 1956 they jointly established Unimation, the first robotics company, in Danbury, Connecticut. The company was acquired by Consolidated Diesel Corp. (Condec) and became Unimate Inc., a division of Condec. Their first robot went to work in 1961 at a General Motors die-casting plant in New Jersey. In 1968 they licensed technology to Kawasaki Heavy Industries, which produced the first Japanese industrial robot. Engelberger served as chief executive until the company was acquired by Westinghouse in 1982. People and technologies from this company have since been very influential across the whole field of robotics.

George C. Devol, Jr. (1912–2011) was a prolific American inventor. He was born in Louisville, Kentucky, and in 1932 founded United Cinephone Corp., which manufactured phonograph arms and amplifiers, registration controls for printing presses, and packaging machines. In 1954, he applied for US patent 2,988,237 for Programmed Article Transfer, which introduced the concept of Universal Automation or "Unimation". Specifically, it described a track-mounted polar coordinate arm mechanism with a gripper and a programmable controller, the precursor of all modern robots.

In 2011 he was inducted into the National Inventors Hall of Fame. (Photo on the right: courtesy of George C. Devol)

These first-generation robots are fixed in place and cannot move about the factory; they are not mobile. By contrast, mobile robots, as shown in Figs. and 1.5, can move through the world using various forms of mobility. They can locomote over the ground using wheels or legs, fly through the air using fixed wings or multiple rotors, and move through the water or sail over it. An alternative taxonomy is based on the function that the robot performs. Manufacturing robots operate in factories and are the technological descendants of the first-generation robots. Service robots supply services to people, such as cleaning, personal care, medical rehabilitation, or fetching and carrying, as shown in Fig. Field robots, such as those shown in Fig., work outdoors on tasks such as environmental monitoring, agriculture, mining, construction and forestry. Humanoid robots, such as the one shown in Fig., have the physical form of a human being; they are both mobile robots and service robots.

Cybernetics, artificial intelligence and robotics. Cybernetics flourished as a research field from the 1930s until the 1960s and was fueled by a heady mix of new ideas and results from neurology, control theory and information theory. Research in neurology had shown that the brain was an electrical network of neurons. Harold Black, Hendrik Bode and Harry Nyquist at Bell Labs were researching negative feedback and the stability of electrical networks, Claude Shannon's information theory described digital signals, and Alan Turing was exploring the fundamentals of computation. Walter Pitts and Warren McCulloch proposed an artificial neuron in 1943 and showed how it might perform simple logical functions. In 1951, as his graduate project, Marvin Minsky built SNARC (from a B-24 autopilot and comprising 3000 vacuum tubes), which was perhaps the first learning machine based on a neural network. William Grey Walter's robotic tortoises showed life-like behavior. Maybe an electronic brain could be built!
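To make "perform simple logical functions" concrete, here is a minimal sketch of a threshold unit in the spirit of the McCulloch–Pitts neuron: it fires when the weighted sum of its binary inputs reaches a threshold. The function names are illustrative only. McCulloch and Pitts treated inhibition as absolute; modelling NOT with a negative weight, as done here, is a common simplification and an assumption of this sketch.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def AND(a, b):
    # Both excitatory inputs must be active to reach a threshold of 2.
    return threshold_unit([a, b], [1, 1], threshold=2)

def OR(a, b):
    # Either excitatory input alone reaches a threshold of 1.
    return threshold_unit([a, b], [1, 1], threshold=1)

def NOT(a):
    # Simplification: inhibition modelled as a negative weight, threshold 0.
    return threshold_unit([a], [-1], threshold=0)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", AND(a, b), "OR:", OR(a, b))
```

Only the weights and the threshold change between gates; the unit itself is the same, which is what made such neurons attractive as building blocks for an "electronic brain".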

So what is a robot? There are many definitions, and not all of them are particularly helpful. A definition that will serve us well in this book is a goal-oriented machine that can sense, plan and act.

A robot senses its environment and uses that information, together with a goal, to plan some action. The action might be to move the tool of a robot arm to grasp an object, or to drive a mobile robot to some place.
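The sense–plan–act cycle can be sketched as a loop. This is a toy illustration under loud assumptions: a hypothetical robot on a one-dimensional line, an ideal noise-free position sensor, and a planner that simply steps toward the goal by at most `max_step` per cycle. The names `World`, `sense`, `plan`, `act` and `run` are invented for this example.

```python
from dataclasses import dataclass

@dataclass
class World:
    """Hypothetical 1-D world: the robot's true position along a line, in metres."""
    robot_position: float = 0.0

def sense(world: World) -> float:
    # Idealised measurement: the exact position, no noise.
    return world.robot_position

def plan(position: float, goal: float, max_step: float = 0.5) -> float:
    # Move toward the goal, but never more than max_step in one cycle.
    error = goal - position
    return max(-max_step, min(max_step, error))

def act(world: World, step: float) -> None:
    # Execute the planned motion in the simulated world.
    world.robot_position += step

def run(goal: float, tolerance: float = 1e-6, max_cycles: int = 100):
    """Repeat sense -> plan -> act until the goal is reached or cycles run out."""
    world = World()
    for cycle in range(max_cycles):
        position = sense(world)
        if abs(goal - position) < tolerance:
            return cycle, position
        act(world, plan(position, goal))
    return max_cycles, sense(world)

cycles, final = run(goal=2.0)
print(cycles, final)
```

A real robot replaces each function with something far richer (noisy sensors, a map, a motion planner, motor controllers), but the goal-driven loop structure is the same.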

Sensing is critical to robots. Proprioceptive sensors measure the state of the robot itself: the angle of the joints on a robot arm, the number of wheel revolutions on a mobile robot or the current drawn by an electric motor. Exteroceptive sensors measure the state of the world with respect to the robot. The sensor might be a simple bump sensor on a robot vacuum cleaner that detects collisions.
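As an example of a proprioceptive measurement, counting wheel revolutions lets a mobile robot estimate how far it has rolled: distance equals revolutions times the wheel circumference, 2πr. The sketch below assumes an incremental wheel encoder and no wheel slip; the wheel radius and encoder resolution are hypothetical values chosen for illustration.

```python
import math

def encoder_to_revolutions(ticks: int, ticks_per_rev: int) -> float:
    # Convert raw encoder counts to wheel revolutions.
    return ticks / ticks_per_rev

def wheel_distance(revolutions: float, wheel_radius: float) -> float:
    """Distance rolled by a wheel, assuming no slip: revolutions x circumference."""
    return revolutions * 2.0 * math.pi * wheel_radius

# Hypothetical robot: 0.1 m wheel radius and a 1024-tick-per-revolution encoder.
revs = encoder_to_revolutions(ticks=5120, ticks_per_rev=1024)  # 5 revolutions
print(f"{wheel_distance(revs, wheel_radius=0.1):.3f} m")
```

The no-slip assumption is exactly why such estimates drift in practice, and why robots combine proprioceptive with exteroceptive sensing.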

It might be a GPS receiver that measures distances to an orbiting satellite constellation, or a compass that measures the direction of the Earth’s magnetic field vector relative to the robot. It might also be an active sensor that emits acoustic, optical or radio pulses in order to measure the distance to points in the world based on the time taken for a reflection to return to the sensor.
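The active-sensor principle reduces to one line of arithmetic: the pulse travels to the reflecting point and back, so the distance is half the wave speed times the round-trip time. This minimal sketch uses the speed of light for optical or radio pulses and an approximate speed of sound in air (about 343 m/s near 20 °C) for acoustic pulses; the function name and example timings are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, for optical or radio pulses
SPEED_OF_SOUND_AIR = 343.0      # m/s, approximate, dry air near 20 degrees C

def time_of_flight_range(round_trip_time: float, wave_speed: float) -> float:
    """Distance to the reflecting point: the pulse goes out and back, so halve it."""
    return wave_speed * round_trip_time / 2.0

# An ultrasonic echo returning after 5.83 ms comes from roughly 1 m away.
print(time_of_flight_range(5.83e-3, SPEED_OF_SOUND_AIR))
# A laser pulse returning after 66.7 ns comes from roughly 10 m away.
print(time_of_flight_range(66.7e-9, SPEED_OF_LIGHT))
```

The second example hints at why optical rangefinders need very precise timing: a 10 m range corresponds to a round trip of only tens of nanoseconds.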

A camera is a passive device that captures patterns of optical energy reflected from the scene. Our own experience is that eyes are a very effective sensor for recognition, navigation, obstacle avoidance and manipulation, so vision has long been of interest to robotics researchers. An important limitation of a single camera, or a single eye, is that the 3-dimensional structure of the scene is lost in the resulting 2-dimensional image. Despite this, humans are particularly good at inferring the 3-dimensional nature of a scene using a number of visual cues.
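Why depth is lost can be seen with an ideal pinhole camera model, an assumption of this sketch (real lenses add distortion): a point (X, Y, Z) in the camera frame projects to image coordinates (fX/Z, fY/Z). Every point along the same ray from the camera centre therefore lands on the same image point. The focal length and points below are hypothetical.

```python
from typing import Tuple

def project(point: Tuple[float, float, float], focal_length: float) -> Tuple[float, float]:
    """Ideal pinhole projection of a 3-D camera-frame point (Z forward) to the image plane."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return (focal_length * X / Z, focal_length * Y / Z)

f = 0.008                 # hypothetical 8 mm focal length
near = (0.5, 0.25, 2.0)
far = (1.0, 0.5, 4.0)     # same direction, twice as far away
print(project(near, f), project(far, f))  # identical image coordinates
```

Because the division by Z discards the scale along the ray, a single image cannot tell a small, near object from a large, distant one without extra cues.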

Robots are not yet as capable. Figure shows some very early work on reconstructing a 3-dimensional wireframe model from a single 2-dimensional image and gives some idea of the difficulties involved. Another approach is stereo vision, where information from two cameras is combined to estimate the 3-dimensional structure of the scene. This technique is used by humans and by robots; for example, the Mars rover shown in Fig. has a stereo camera on its mast.
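For an idealised stereo pair, depth follows from how far a point shifts between the two images. Assuming two identical, rectified cameras (parallel optical axes, image rows aligned), a point seen with disparity d pixels lies at depth Z = f·b/d, where f is the focal length in pixels and b the baseline between the cameras. The rig parameters below are hypothetical, not those of any particular rover.

```python
def stereo_depth(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * b / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical rig: 800 px focal length, 0.12 m baseline between the cameras.
for d in (96.0, 48.0, 24.0):
    print(f"disparity {d:5.1f} px -> depth {stereo_depth(d, 800.0, 0.12):.2f} m")
```

Depth is inversely proportional to disparity, so halving the disparity doubles the estimated depth; distant points have small disparities, which makes their depth estimates more sensitive to matching errors.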
